Generative adversarial networks (GANs)

Picture from here.

I came across Generative adversarial networks (GANs) recently.

They look really interesting because they take a hybrid approach to machine learning, using generative and discriminative learning at the same time.

This is the abstract from the paper:

Generative Adversarial Networks

(Submitted on 10 Jun 2014)

We propose a new framework for estimating generative models via an adversarial process, in which we simultaneously train two models: a generative model G that captures the data distribution, and a discriminative model D that estimates the probability that a sample came from the training data rather than G. The training procedure for G is to maximize the probability of D making a mistake. This framework corresponds to a minimax two-player game. In the space of arbitrary functions G and D, a unique solution exists, with G recovering the training data distribution and D equal to 1/2 everywhere. In the case where G and D are defined by multilayer perceptrons, the entire system can be trained with backpropagation. There is no need for any Markov chains or unrolled approximate inference networks during either training or generation of samples. Experiments demonstrate the potential of the framework through qualitative and quantitative evaluation of the generated samples.
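To make the "D equal to 1/2 everywhere" claim concrete for myself, here is a minimal sketch on a tiny discrete distribution. It uses the value function from the paper, V(D, G) = E_data[log D(x)] + E_g[log(1 - D(x))], and the paper's result that for a fixed generator the best discriminator is D*(x) = p_data(x) / (p_data(x) + p_g(x)). The function names and the three-outcome distributions are my own illustrative choices, not anything from the paper.

```python
import math

def optimal_discriminator(p_data, p_g):
    """Best response D*(x) = p_data(x) / (p_data(x) + p_g(x)) for a fixed generator."""
    return [pd / (pd + pg) if (pd + pg) > 0 else 0.5
            for pd, pg in zip(p_data, p_g)]

def value(p_data, p_g, d):
    """V(D, G) = sum_x p_data(x) log D(x) + sum_x p_g(x) log(1 - D(x)).

    Terms with zero probability are skipped so that 0 * log(0) counts as 0.
    (With an arbitrary, non-optimal d this can still hit log(0).)
    """
    total = 0.0
    for pd, pg, dx in zip(p_data, p_g, d):
        if pd > 0:
            total += pd * math.log(dx)
        if pg > 0:
            total += pg * math.log(1.0 - dx)
    return total

# Hypothetical toy distributions over three outcomes.
p_data = [0.1, 0.4, 0.5]

# Case 1: the generator matches the data exactly.
d_match = optimal_discriminator(p_data, p_data)
v_match = value(p_data, p_data, d_match)

# Case 2: the generator is off, so D can do better than guessing.
p_g_off = [0.5, 0.4, 0.1]
d_off = optimal_discriminator(p_data, p_g_off)
v_off = value(p_data, p_g_off, d_off)

print(d_match)           # every entry is 0.5
print(v_match, v_off)    # v_match equals -log(4); v_off is larger
```

When the generator matches the data, the discriminator can do no better than a coin flip and the value bottoms out at -log 4. Any mismatch gives D something to exploit and pushes the value up, which is exactly what G is trying to prevent.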

Here's the explanation from this blog:

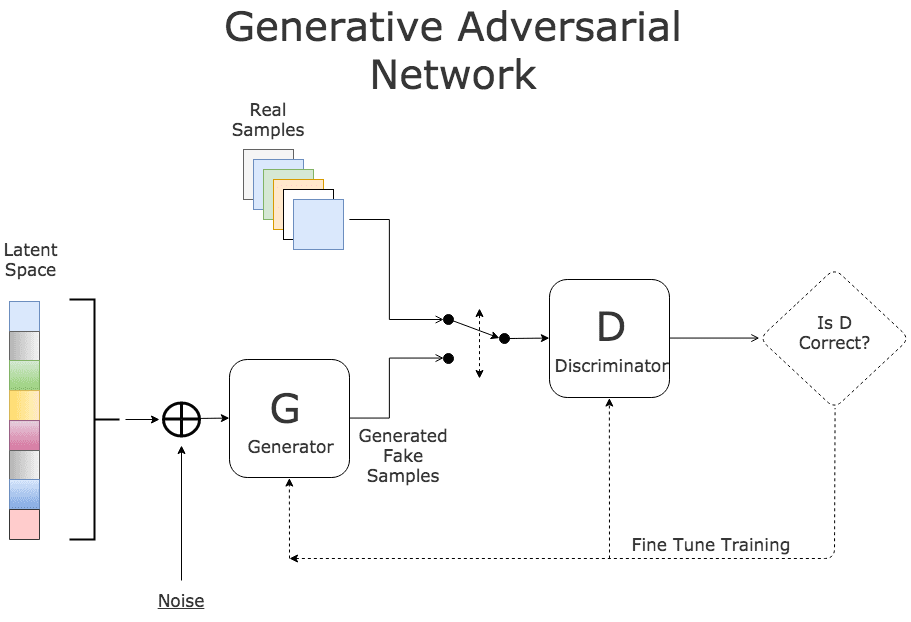

The models play two distinct (literally, adversarial) roles. Given some real data set R, G is the generator, trying to create fake data that looks just like the genuine data, while D is the discriminator, getting data from either the real set or G and labeling the difference. Goodfellow’s metaphor (and a fine one it is) was that G was like a team of forgers trying to match real paintings with their output, while D was the team of detectives trying to tell the difference. (Except that in this case, the forgers G never get to see the original data — only the judgments of D. They’re like blind forgers.)
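The forger/detective loop can be sketched in a few lines of plain Python. This is only a toy under my own assumptions: the "real" data is a 1-D Gaussian centred on 4, G is a linear map of noise (a*z + b), D is a logistic unit, and the gradients are written out by hand. For the G step I use the variant the paper suggests for practice (maximise log D(G(z)) rather than minimise log(1 - D(G(z)))). Note that the generator update never touches x_real; it only sees D's gradient, which is the "blind forger" point.

```python
import math
import random

def sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.05, seed=0):
    """Alternate one D step and one G step per iteration (toy 1-D setup)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator parameters: G(z) = a*z + b
    w, c = 0.1, 0.0   # discriminator parameters: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 0.5)   # assumed "real" data distribution
        z = rng.gauss(0.0, 1.0)        # noise fed to the generator
        x_fake = a * z + b

        # Detective step: gradient ascent on log D(x_real) + log(1 - D(x_fake)).
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        w += lr * ((1.0 - d_real) * x_real - d_fake * x_fake)
        c += lr * ((1.0 - d_real) - d_fake)

        # Forger step: gradient ascent on log D(G(z)), using only D's judgment.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = (1.0 - d_fake) * w    # d/dx log D(x) at x = x_fake
        a += lr * grad_x * z
        b += lr * grad_x
    return a, b, w, c

a, b, w, c = train_toy_gan()
print("generator: G(z) = %.3f*z + %.3f" % (a, b))
print("discriminator: D(x) = sigmoid(%.3f*x + %.3f)" % (w, c))
```

I would not read much into the final numbers. A logistic D on a scalar input can really only tell the two distributions apart by where they sit, and GAN training tends to oscillate rather than settle, so this is about the shape of the loop, not a working model.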

And this explanation from the blog the picture above was from:

As an example, consider an image classifier D designed to identify a series of images depicting various animals. Now consider an adversary (G) with the mission to fool D using carefully crafted images that look almost right but not quite. This is done by picking a legitimate sample randomly from training set (latent space) and synthesizing a new image by randomly altering its features (by adding random noise). As an example, G can fetch the image of a cat and can add an extra eye to the image converting it to a false sample. The result is an image very similar to a normal cat with the exception of the number of eye.

The trick seems to be the Generator's ability to produce lots of negative examples that are close to the positive examples. This is interesting because one of the machine learning algorithms I developed in the 80s was designed for a similar case, where there were insufficient negative examples to learn complex concepts. One of the approaches I used was perhaps similar: generating synthetic data (which was actually all I could do, because my target concepts were synthetic and I had to generate the worlds and examples automatically before/during learning).

As it's more of an ML architecture than an algorithm, I wonder if it would work for other algorithms? With more than 2 algorithms at once? I.e. why stop with 2 opponents? Why not 10? 100? More? Even multiple generative algorithms competing?

Some improvements to GANs.

And an example of generative art using the improved WGAN algorithm (TODO: try).
