Chapter 12: Security on AWS (Part 4)

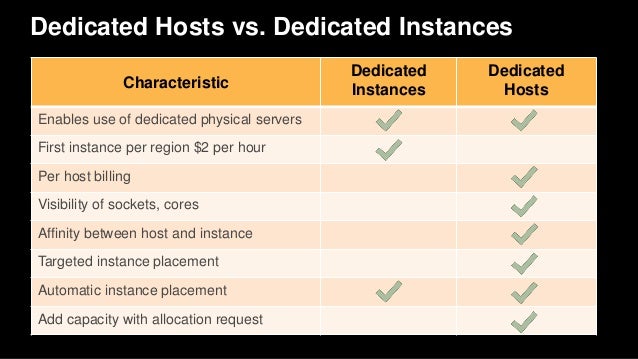

Dedicated Instances and Dedicated Hosts

Dedicated Instances are possible. But why? If AWS is so sure that its VM isolation is good, what does this add? Why offer Dedicated Instances at all? Maybe for marketing and auditing?

And how are Dedicated Instances any different from (or better than) other cloud vendors' bare-metal instances? Price? Performance? Security?

And then there are Dedicated Hosts as well (which, oddly, the book doesn't mention in this chapter).

Are there any security differences? Nope. I guess there are architectural, licensing (for the OS?) and price differences. From the docs:

There are no performance, security, or physical differences between Dedicated Instances and instances on Dedicated Hosts. However, Dedicated Hosts give you additional visibility and control over how instances are placed on a physical server.

When you use Dedicated Hosts, you have control over instance placement on the host using the Host Affinity and Instance Auto-placement settings. With Dedicated Instances, you don't have control over which host your instance launches and runs on. If your organization wants to use AWS, but has an existing software license with hardware compliance requirements, this allows visibility into the host's hardware so you can meet those requirements.

For more information about the differences between Dedicated Hosts and Dedicated Instances, see Amazon EC2 Dedicated Hosts.

Amazon CloudFront Security

Security for a CDN is perhaps a slightly odd notion; after all, isn't the point to provide cached copies of data to the public internet? There is a private content feature. It also supports "geo restriction" (i.e. only people on the moon can read this blog!). There are also "origin access identities" and distributions.

Storage - AWS S3 Security

A few odd things: pre-signed URLs, the same-origin policy and cross-origin resource sharing (CORS). Pre-signed URLs are for sharing S3 objects with others for a limited time. Why a limited time? Not sure.

You can also use Pre-signed URLs to allow someone else to upload S3 objects (is there a time limit for this? Not sure).
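A plausible reason for the time limit: a pre-signed URL is a bearer credential, so anyone who gets hold of the URL can use it, and the expiry bounds the damage if it leaks. Below is a minimal Python sketch of the general idea, not AWS's actual scheme: the server signs the HTTP method, the object path and an expiry time with a secret key using HMAC-SHA256, then checks both signature and expiry when the URL comes back. Real S3 pre-signed URLs use AWS Signature Version 4 query parameters such as `X-Amz-Expires` and `X-Amz-Signature`; the `presign`/`verify` functions, the `expires`/`sig` parameters and the secret below are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

SECRET = b"hypothetical-server-secret"  # known only to the server that issues URLs


def _sign(method: str, path: str, expires: int) -> str:
    message = f"{method}\n{path}\n{expires}".encode("utf-8")
    return hmac.new(SECRET, message, hashlib.sha256).hexdigest()


def presign(path: str, method: str, ttl_seconds: int, now: Optional[int] = None) -> str:
    """Return a URL that grants `method` on `path` until now + ttl_seconds."""
    now = int(time.time()) if now is None else now
    expires = now + ttl_seconds
    query = urlencode({"expires": expires, "sig": _sign(method, path, expires)})
    return f"{path}?{query}"


def verify(url: str, method: str, now: Optional[int] = None) -> bool:
    """Server-side check: the signature must match and the URL must not have expired."""
    now = int(time.time()) if now is None else now
    parsed = urlparse(url)
    params = parse_qs(parsed.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError):
        return False
    if now > expires:
        return False  # a leaked URL stops working once it expires
    expected = _sign(method, parsed.path, expires)
    return hmac.compare_digest(sig.encode("utf-8"), expected.encode("utf-8"))


url = presign("/my-bucket/report.pdf", "GET", ttl_seconds=3600, now=1_000_000)
print(verify(url, "GET", now=1_000_100))  # True
print(verify(url, "GET", now=1_004_000))  # False: expired
print(verify(url, "PUT", now=1_000_100))  # False: signed for GET only
```

Because the method is part of what gets signed (in this sketch, and in SigV4's canonical request), a URL signed for a download can't be reused for an upload, and an upload URL expires in exactly the same way.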

And a blog post on pre-signed URLs with SSE (server-side encryption).

And also pre-signed URLs for CloudFront (not mentioned in the section on CloudFront above in the book?)

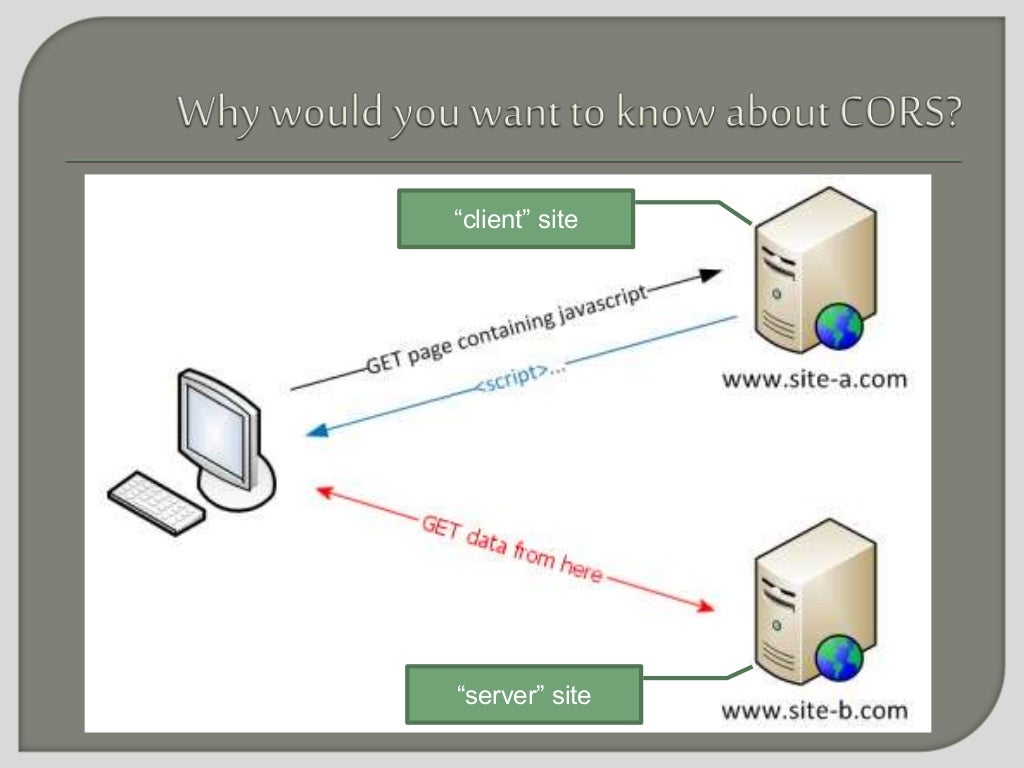

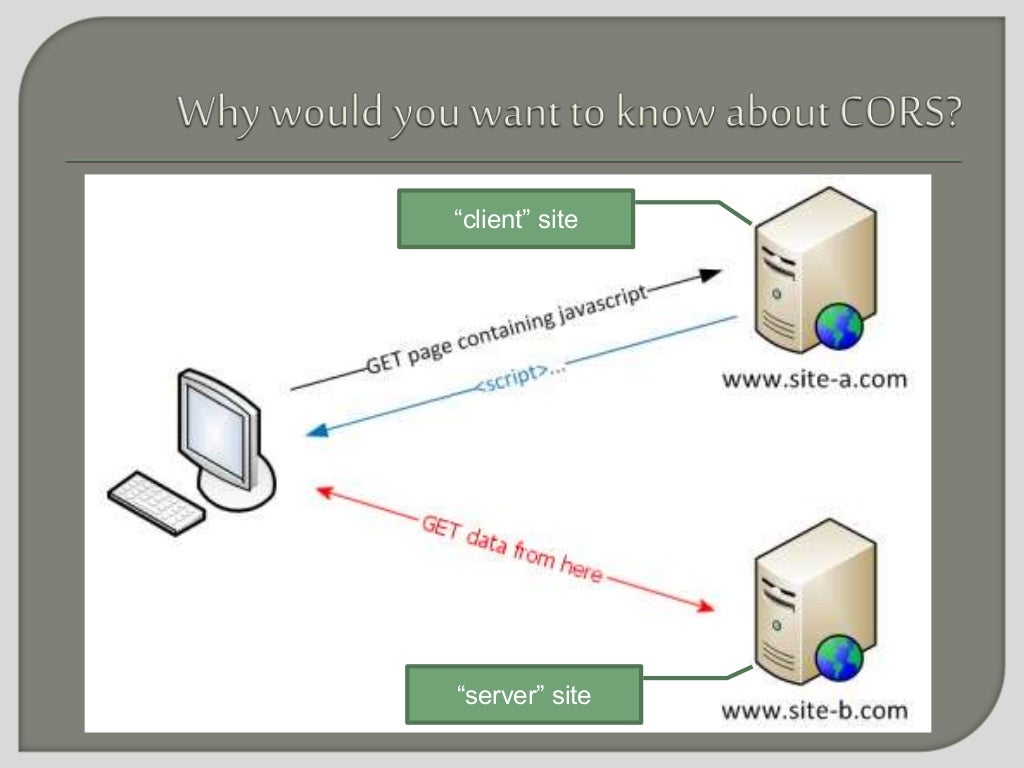

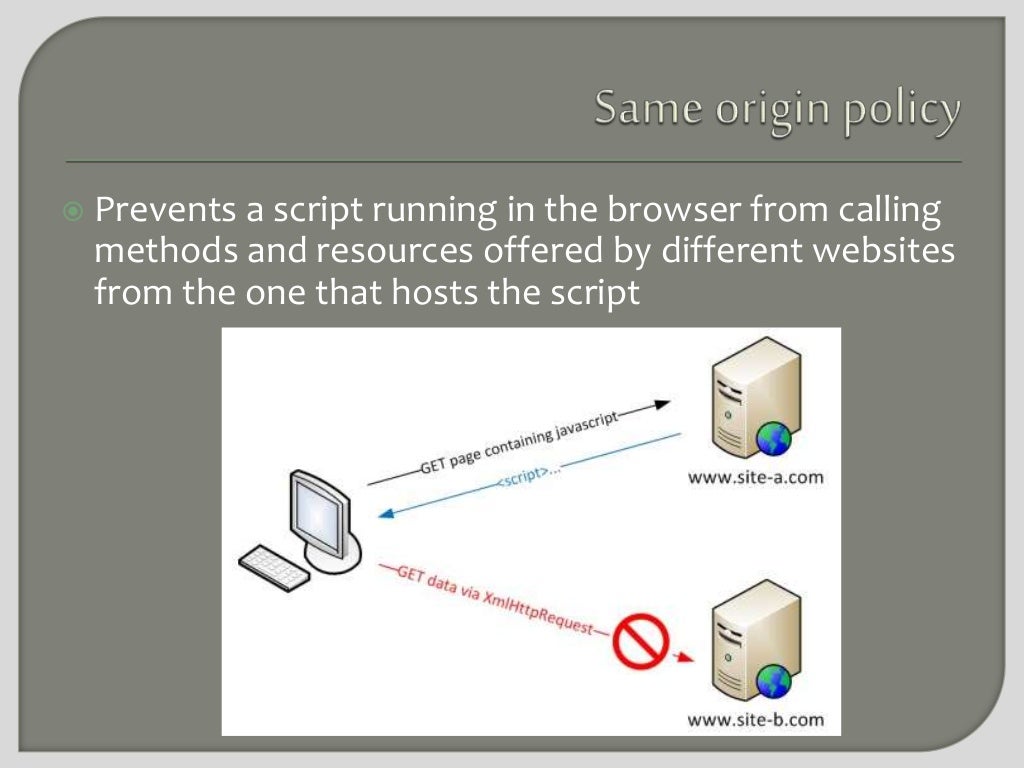

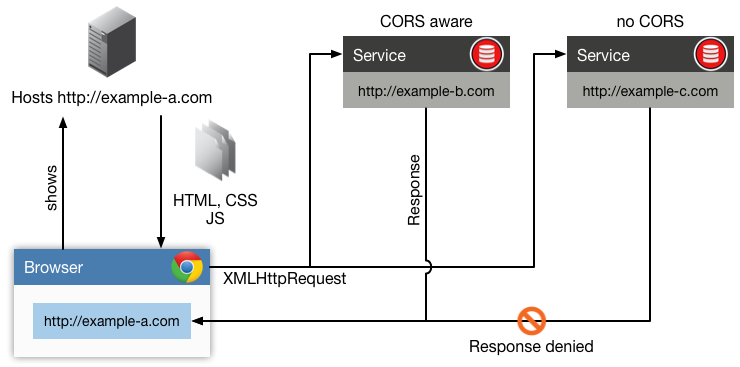

What's the same origin policy?

It seems to be a purely client-side (browser-enforced) policy.
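The browser's check boils down to comparing origins, where an origin is the (scheme, host, port) triple of a URL; two URLs share an origin only if all three match. A minimal Python sketch of that comparison, assuming only `http`/`https` URLs and ignoring special cases such as opaque origins and `document.domain`; the function names are hypothetical.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that identifies a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return (scheme, host, port)


def same_origin(a: str, b: str) -> bool:
    return origin(a) == origin(b)


print(same_origin("https://example.com/a", "https://example.com:443/b"))  # True: 443 is the default
print(same_origin("https://example.com/", "http://example.com/"))        # False: scheme differs
print(same_origin("https://example.com/", "https://api.example.com/"))   # False: host differs
print(same_origin("https://example.com/", "https://example.com:8443/"))  # False: port differs
```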

And an explanation from computer scientists at Stanford from a WWW 2006 paper:

Through a variety of means, including a range of browser cache methods and inspecting the color of a visited hyperlink, client-side browser state can be exploited to track users against their wishes. This tracking is possible because persistent, client-side browser state is not properly partitioned on per-site basis in current browsers. We address this problem by refining the general notion of a “same-origin” policy and implementing two browser extensions that enforce this policy on the browser cache and visited links. We also analyze various degrees of cooperation between sites to track users, and show that even if long-term browser state is properly partitioned, it is still possible for sites to use modern web features to bounce users between sites and invisibly engage in cross-domain tracking of their visitors. Cooperative privacy attacks are an unavoidable consequence of all persistent browser state that affects the behavior of the browser, and disabling or frequently expiring this state is the only way to achieve true privacy against colluding parties.

And a more recent analysis of Cross-origin resource sharing (CORS).

You can set this on an S3 bucket, but I thought it was a client side thing?

Here's the associated W3C recommendation for CORS.

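So CORS is an HTML5 feature thingy: the browser enforces it, but it does require server support, because the server has to send the right `Access-Control-Allow-*` response headers.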
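That split answers the S3 question: the server decides, the browser enforces. When a page on one origin wants to, say, PUT to a bucket on another origin, the browser first sends a preflight `OPTIONS` request carrying `Origin` and `Access-Control-Request-Method` headers; the server (here S3, checking the bucket's CORS rules) replies with `Access-Control-Allow-*` headers or it doesn't, and the browser then allows or blocks the real request. A simplified Python sketch of the server side, assuming a single rule whose keys mirror S3's CORS configuration fields (`AllowedOrigins`, `AllowedMethods`, `AllowedHeaders`, `MaxAgeSeconds`); the `preflight_response` function is hypothetical and its matching is deliberately simpler than S3's (no wildcard-subdomain matching, no `ExposeHeaders`).

```python
from typing import Dict, List, Optional

# One CORS rule, with keys named after S3's bucket CORS configuration fields.
CORS_RULES = [
    {
        "AllowedOrigins": ["https://www.example.com"],
        "AllowedMethods": ["GET", "PUT"],
        "AllowedHeaders": ["content-type"],
        "MaxAgeSeconds": 3000,
    }
]


def preflight_response(origin: str, method: str,
                       request_headers: List[str]) -> Optional[Dict[str, str]]:
    """Answer a browser's OPTIONS preflight: CORS headers if a rule matches, else None."""
    wanted = {h.lower() for h in request_headers}
    for rule in CORS_RULES:
        origins = rule["AllowedOrigins"]
        allowed_headers = {h.lower() for h in rule.get("AllowedHeaders", [])}
        if not ("*" in origins or origin in origins):
            continue
        if method not in rule["AllowedMethods"]:
            continue
        if not ("*" in allowed_headers or wanted <= allowed_headers):
            continue
        return {
            "Access-Control-Allow-Origin": origin,
            "Access-Control-Allow-Methods": ", ".join(rule["AllowedMethods"]),
            "Access-Control-Allow-Headers": ", ".join(sorted(wanted)),
            "Access-Control-Max-Age": str(rule.get("MaxAgeSeconds", 0)),
        }
    return None  # no matching rule: no CORS headers, so the browser blocks the request


print(preflight_response("https://www.example.com", "PUT", ["Content-Type"]))
print(preflight_response("https://evil.example.net", "PUT", ["Content-Type"]))  # None
```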

A good simple explanation:

DynamoDB Security

It mentions HMAC-SHA-256 signatures. What's this? Maybe SHA-256, an example hash function?
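In short, HMAC-SHA-256 is a keyed message authentication code (HMAC, RFC 2104) built on top of the SHA-256 hash. Anyone can compute the SHA-256 of a message, but only someone holding the secret key can compute its HMAC, which is what lets AWS check both that a request wasn't altered and who sent it. AWS Signature Version 4, which DynamoDB API requests use, derives a per-date, per-region, per-service signing key by chaining HMAC-SHA-256. A standard-library Python sketch of that chain; the secret key and the string to sign are placeholders, and building the real SigV4 canonical request and string to sign is omitted.

```python
import hashlib
import hmac


def hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date: str, region: str, service: str) -> bytes:
    """Derive a SigV4-style signing key by chaining HMAC-SHA-256 (date is YYYYMMDD)."""
    k_date = hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = hmac_sha256(k_date, region)
    k_service = hmac_sha256(k_region, service)
    return hmac_sha256(k_service, "aws4_request")


string_to_sign = "placeholder string to sign"  # real SigV4 builds this from the canonical request

# A plain hash: anyone can compute this, so it proves nothing about who sent the message.
print(hashlib.sha256(string_to_sign.encode("utf-8")).hexdigest())

# A keyed MAC: only a holder of the (placeholder) secret key can produce this value.
signing_key = sigv4_signing_key("EXAMPLE-SECRET-KEY", "20150830", "us-east-1", "dynamodb")
signature = hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
print(signature)
```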

Amazon RDS

Mentions bastion hosts. Never heard of them. What are they?

A bastion host is a server whose purpose is to provide access to a private network from an external network, such as the Internet. Because of its exposure to potential attack, a bastion host must minimize the chances of penetration. For example, you can use a bastion host to mitigate the risk of allowing SSH connections from an external network to the Linux instances launched in a private subnet of your Amazon Virtual Private Cloud (VPC).

However, it seems that bastion hosts are a VPC thing rather than an RDS one? Definitive docs are also hard to find.
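One way to see why a bastion host is a VPC (network) pattern rather than an RDS feature is to model the security group rules involved. Below is a simplified Python sketch with hypothetical group names and the documentation-only range 203.0.113.0/24: the bastion accepts SSH only from an office address range, and the private instances accept SSH only from members of the bastion's security group. Real security groups are stateful and have more rule types; this only models the inbound check.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class InboundRule:
    port: int
    cidr: Optional[str] = None          # allow sources in this address range...
    source_group: Optional[str] = None  # ...or sources that are members of this group


# Hypothetical security groups for the bastion pattern.
SECURITY_GROUPS = {
    "bastion-sg": [InboundRule(port=22, cidr="203.0.113.0/24")],      # SSH from the office only
    "private-sg": [InboundRule(port=22, source_group="bastion-sg")],  # SSH from the bastion only
}


def inbound_allowed(src_ip: str, src_groups: List[str], dst_group: str, port: int) -> bool:
    """Does any inbound rule of dst_group admit this source on this port?"""
    for rule in SECURITY_GROUPS[dst_group]:
        if rule.port != port:
            continue
        if rule.cidr and ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.cidr):
            return True
        if rule.source_group and rule.source_group in src_groups:
            return True
    return False


print(inbound_allowed("203.0.113.10", [], "bastion-sg", 22))          # True: office -> bastion
print(inbound_allowed("203.0.113.10", [], "private-sg", 22))          # False: office -> private
print(inbound_allowed("10.0.0.5", ["bastion-sg"], "private-sg", 22))  # True: bastion -> private
```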

And another blog. However, my suspicion is that all this stuff only really makes sense to a network/security administrator in practice.

Encryption: RDS supports Transparent Data Encryption (TDE) for SQL Server (and, per the docs below, Oracle). TDE automatically encrypts data before it is written to storage and automatically decrypts data when it is read from storage.

Amazon RDS also supports encrypting an Oracle or SQL Server DB instance with Transparent Data Encryption (TDE). TDE can be used in conjunction with encryption at rest, although using TDE and encryption at rest simultaneously might slightly affect the performance of your database. You must manage different keys for each encryption method.

RDS scale compute operations?

The only maintenance events that require Amazon RDS to take your DB instance offline are scale compute operations (which generally take only a few minutes from start-to-finish) or required software patching. p338

Or is it "compute scaling" (maybe "scale compute" is an error?):

You can scale the compute and memory resources powering your deployment up or down, up to a maximum of 32 vCPUs and 244 GiB of RAM. Compute scaling operations typically complete in a few minutes.

Redshift Security

IPsec VPN to connect Redshift in a VPC with your own network. What's this?

Q. How does a hardware VPN connection work with Amazon VPC?

A hardware VPN connection connects your VPC to your datacenter. Amazon supports Internet Protocol security (IPsec) VPN connections. Data transferred between your VPC and datacenter routes over an encrypted VPN connection to help maintain the confidentiality and integrity of data in transit. An Internet gateway is not required to establish a hardware VPN connection.

Q. What is IPsec?

IPsec is a protocol suite for securing Internet Protocol (IP) communications by authenticating and encrypting each IP packet of a data stream.

By default, instances that you launch into a virtual private cloud (VPC) can't communicate with your own network. You can enable access to your network from your VPC by attaching a virtual private gateway to the VPC, creating a custom route table, and updating your security group rules.

...

Although the term VPN connection is a general term, in the Amazon VPC documentation, a VPN connection refers to the connection between your VPC and your own network. AWS supports Internet Protocol security (IPsec) VPN connections.

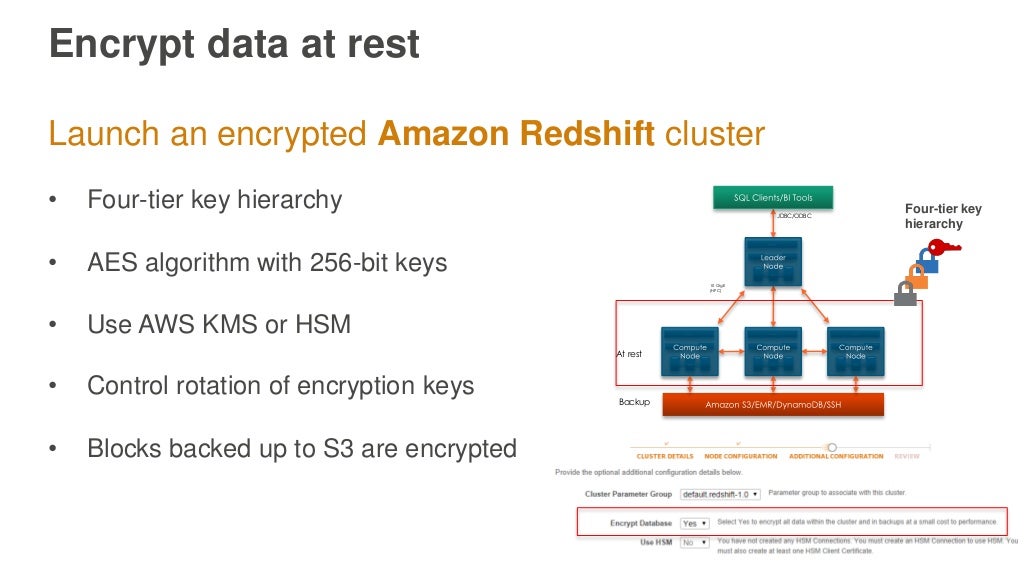

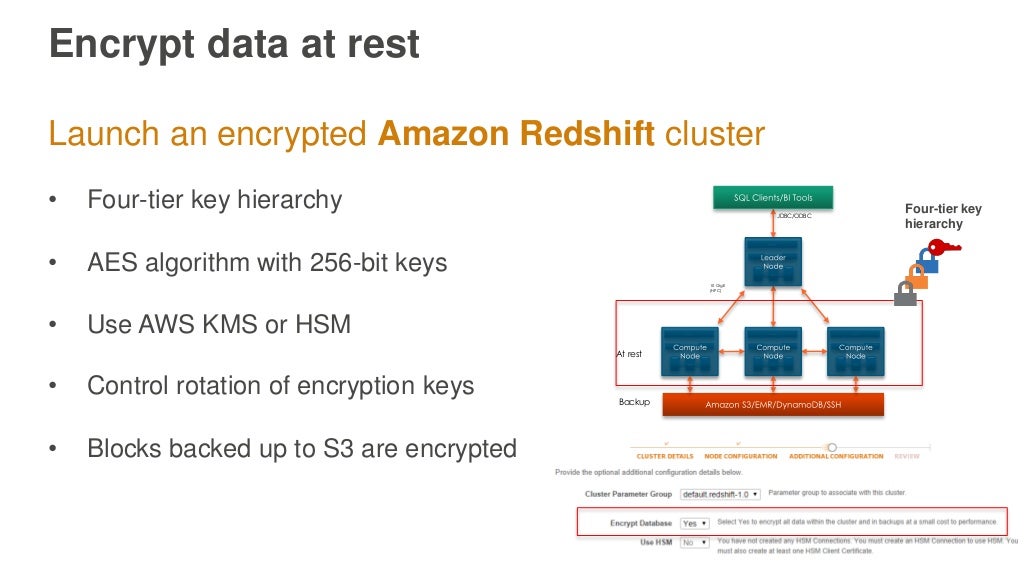

Redshift uses a four-tier, key-based architecture for encryption. Why 4??? Is this really enough? If four tiers is good, surely five is better :-? It's tricky to find other references to four-tier encryption. Is this only an AWS thing? There is a paper on a four-tier architecture.

Oh, and I can't believe that one of the certification exam questions is how many tiers the Redshift encryption architecture uses (1, 2, 3, 4)! Talk about pub trivia quiz night. Maybe they should ask: why does it matter how many tiers Redshift uses for encryption?

I think this is just an example of multiple encryption; it may be better to explain it in that context.

And another question. If a four-tier key-based architecture for encryption is good enough for Redshift why isn't it used for other Amazon databases? Maybe the answer is that this is just the logical encryption architecture required for the Redshift architecture - i.e. it just follows from the Redshift cluster MPP (Massively Parallel Processing) architecture?! YES. This is the correct answer from the following paper (my tier numbering).

This is a good paper motivating the Redshift architecture; it doesn't mention the four-tier security aspect explicitly, but it does explain it well.

Anurag Gupta, Deepak Agarwal, Derek Tan, Jakub Kulesza, Rahul Pathak, Stefano Stefani, and Vidhya Srinivasan. 2015. Amazon Redshift and the Case for Simpler Data Warehouses. In Proceedings of the 2015 ACM SIGMOD International Conference on Management of Data (SIGMOD '15). ACM, New York, NY, USA, 1917-1923. DOI: http://dx.doi.org/10.1145/2723372.2742795

Encryption is similarly straightforward. Enabling encryption requires setting a checkbox in our console and, optionally, specifying a key provider such as a hardware security module (HSM).

(Tier-4) Under the covers, we generate block-specific encryption keys (to avoid injection attacks from one block to another),

(Tier-3) wrap these with cluster-specific keys (to avoid injection attacks from one cluster to another),

(Tier-2) and further wrap these with a master key,

(Tier-1) stored by us off-network or via the customer-specified HSM.

All user data, including backups, is encrypted. Key rotation is straightforward as it only involves re-encrypting block keys or cluster keys, not the entire database. Repudiation is equally straightforward, as it only involves losing access to the customer’s key or re-encrypting all remaining valid cluster keys with a new master. We also benefit from security features in the core AWS platform. For example, we use Amazon VPC to provide network isolation of the compute nodes providing cluster storage, isolating them from general-purpose access from the leader node, which is accessible from the customer’s VPC.

So, the 4-tier architecture is really just: (Tier-1) master key stored off-network, (Tier-2) master key wraps cluster keys, (Tier-3) cluster keys wrap block keys, (Tier-4) block keys encrypt data. The design is to prevent "attacks" within tiers (e.g. block to block, cluster to cluster). How about between tiers (e.g. master to cluster, cluster to blocks)?
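To make the tiers concrete, here is a toy Python sketch of the hierarchy as the paper describes it: a master key wraps a cluster key, the cluster key wraps per-block keys, and each block key encrypts one block of data. The standard library has no AES, so the `encrypt`/`decrypt` helpers are a stand-in assumed purely for illustration (an HMAC-SHA256 keystream with encrypt-then-MAC), not what Redshift uses and not for real data. It also shows the paper's point about cheap key rotation: a new master key only means re-wrapping the cluster key, and no data block is re-encrypted.

```python
import hashlib
import hmac
import secrets


# --- TOY cipher (assumption, not AES): HMAC-SHA256 keystream + encrypt-then-MAC ---
def _subkeys(key: bytes):
    return (hmac.new(key, b"enc", hashlib.sha256).digest(),
            hmac.new(key, b"mac", hashlib.sha256).digest())


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    out, counter = b"", 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return out[:length]


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    ct = bytes(p ^ s for p, s in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def decrypt(key: bytes, blob: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("wrong key or tampered ciphertext")
    return bytes(c ^ s for c, s in zip(ct, _keystream(enc_key, nonce, len(ct))))


# --- The four tiers, numbered as in the summary above ---
master_key = secrets.token_bytes(32)                    # Tier 1: off-network / HSM (a variable here)
cluster_key = secrets.token_bytes(32)
wrapped_cluster_key = encrypt(master_key, cluster_key)  # Tier 2: master key wraps cluster key
block_keys = [secrets.token_bytes(32) for _ in range(3)]
wrapped_block_keys = [encrypt(cluster_key, k) for k in block_keys]    # Tier 3
data = [b"rows in block 0", b"rows in block 1", b"rows in block 2"]
encrypted_blocks = [encrypt(k, d) for k, d in zip(block_keys, data)]  # Tier 4


def read_block(i: int, master: bytes, wrapped_ck: bytes) -> bytes:
    """Walk down the tiers: master key -> cluster key -> block key -> data."""
    ck = decrypt(master, wrapped_ck)
    bk = decrypt(ck, wrapped_block_keys[i])
    return decrypt(bk, encrypted_blocks[i])


# Rotating the master key re-wraps one cluster key; no data block is re-encrypted.
new_master_key = secrets.token_bytes(32)
wrapped_cluster_key = encrypt(new_master_key, decrypt(master_key, wrapped_cluster_key))
print(read_block(1, new_master_key, wrapped_cluster_key))  # b'rows in block 1'

# Block-to-block isolation: block 0's key cannot open block 1.
try:
    decrypt(block_keys[0], encrypted_blocks[1])
except ValueError as err:
    print("block 0 key on block 1:", err)
```

The sketch only demonstrates within-tier isolation and cheap rotation; it doesn't settle the between-tier question.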

I find the above explanation easier to follow than the current documentation, which explains it as follows.

Finally, the four-tier encryption details:

About Database Encryption for Amazon Redshift Using AWS KMS

When you choose AWS KMS for key management with Amazon Redshift, there is a four-tier hierarchy of encryption keys.

These keys, in hierarchical order, are the master key, a cluster encryption key (CEK), a database encryption key (DEK), and data encryption keys.

When you launch your cluster, Amazon Redshift returns a list of the customer master keys (CMKs) that your AWS account has created or has permission to use in AWS KMS. You select a CMK to use as your master key in the encryption hierarchy.

By default, Amazon Redshift selects your default key as the master key. Your default key is an AWS-managed key that is created for your AWS account to use in Amazon Redshift. AWS KMS creates this key the first time you launch an encrypted cluster in a region and choose the default key.

If you don’t want to use the default key, you must have (or create) a customer-managed CMK separately in AWS KMS before you launch your cluster in Amazon Redshift. Customer-managed CMKs give you more flexibility, including the ability to create, rotate, disable, define access control for, and audit the encryption keys used to help protect your data. For more information about creating CMKs, go to Creating Keys in the AWS Key Management Service Developer Guide.

If you want to use a AWS KMS key from another AWS account, you must have permission to use the key and specify its ARN in Amazon Redshift. For more information about access to keys in AWS KMS, go to Controlling Access to Your Keys in the AWS Key Management Service Developer Guide.

After you choose a master key, Amazon Redshift requests that AWS KMS generate a data key and encrypt it using the selected master key. This data key is used as the CEK in Amazon Redshift. AWS KMS exports the encrypted CEK to Amazon Redshift, where it is stored internally on disk in a separate network from the cluster along with the grant to the CMK and the encryption context for the CEK. Only the encrypted CEK is exported to Amazon Redshift; the CMK remains in AWS KMS. Amazon Redshift also passes the encrypted CEK over a secure channel to the cluster and loads it into memory.

Then, Amazon Redshift calls AWS KMS to decrypt the CEK and loads the decrypted CEK into memory. For more information about grants, encryption context, and other AWS KMS-related concepts, go to Concepts in the AWS Key Management Service Developer Guide.

Next, Amazon Redshift randomly generates a key to use as the DEK and loads it into memory in the cluster.

The decrypted CEK is used to encrypt the DEK, which is then passed over a secure channel from the cluster to be stored internally by Amazon Redshift on disk in a separate network from the cluster. Like the CEK, both the encrypted and decrypted versions of the DEK are loaded into memory in the cluster. The decrypted version of the DEK is then used to encrypt the individual encryption keys that are randomly generated for each data block in the database. (the data encryption keys?)

When the cluster reboots, Amazon Redshift starts with the internally stored, encrypted versions of the CEK and DEK, reloads them into memory, and then calls AWS KMS to decrypt the CEK with the CMK again so it can be loaded into memory. The decrypted CEK is then used to decrypt the DEK again, and the decrypted DEK is loaded into memory and used to encrypt and decrypt the data block keys as needed.

Simple, isn't it!
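Perhaps it is simpler as code. Here is a toy Python simulation of the launch and reboot sequence described in the docs, with a hypothetical `ToyKMS` class standing in for AWS KMS (it is not the boto3 API; grants and encryption context are omitted) and a toy HMAC-based cipher in place of real AES. Only encrypted copies of the CEK and DEK are persisted, to a dict standing in for Redshift's separate-network storage, and after a "reboot" the chain is rebuilt from them.

```python
import hashlib
import hmac
import secrets


# --- TOY cipher (assumption, not AES): HMAC-SHA256 keystream + encrypt-then-MAC ---
def _subkeys(key):
    return (hmac.new(key, b"enc", hashlib.sha256).digest(),
            hmac.new(key, b"mac", hashlib.sha256).digest())


def _keystream(key, nonce, length):
    out, counter = b"", 0
    while len(out) < length:
        out += hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return out[:length]


def encrypt(key, plaintext):
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    ct = bytes(p ^ s for p, s in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def decrypt(key, blob):
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("wrong key or tampered ciphertext")
    return bytes(c ^ s for c, s in zip(ct, _keystream(enc_key, nonce, len(ct))))


class ToyKMS:
    """Hypothetical stand-in for AWS KMS: the CMK never leaves this object."""

    def __init__(self):
        self._cmk = secrets.token_bytes(32)

    def generate_encrypted_data_key(self):
        return encrypt(self._cmk, secrets.token_bytes(32))  # only the encrypted copy leaves

    def decrypt(self, blob):
        return decrypt(self._cmk, blob)  # calls the module-level toy decrypt


kms = ToyKMS()
stored = {}  # stands in for Redshift's storage on a network separate from the cluster

# Launch: KMS generates the CEK under the CMK; Redshift persists only the encrypted CEK,
stored["encrypted_cek"] = kms.generate_encrypted_data_key()
# then asks KMS to decrypt it and holds the plaintext CEK in memory.
cek = kms.decrypt(stored["encrypted_cek"])
# Redshift generates a random DEK, encrypts it with the CEK and persists the encrypted copy.
dek = secrets.token_bytes(32)
stored["encrypted_dek"] = encrypt(cek, dek)
# The DEK encrypts the random per-block keys; each block key encrypts its block.
block_key = secrets.token_bytes(32)
encrypted_block_key = encrypt(dek, block_key)
encrypted_block = encrypt(block_key, b"some rows")

# Reboot: in-memory plaintext keys are lost; rebuild the chain from the stored copies.
del cek, dek, block_key
cek = kms.decrypt(stored["encrypted_cek"])  # needs the CMK, i.e. a call to KMS
dek = decrypt(cek, stored["encrypted_dek"])
print(decrypt(decrypt(dek, encrypted_block_key), encrypted_block))  # b'some rows'
```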

And maybe this presentation on securing Big Data.

Which has the only diagram of the Redshift 4-tier key architecture that I can find anywhere.
